From Jekyll to a self-hosted Ghost CMS instance

Last summer, when I began thinking about starting a blog, I didn't have a clear idea of how to proceed.

I had my fair share of blogging as a teenager on WordPress, and later as a freelance video game journalist for one of the top Italian web magazines in the early 2010s, but the idea of a serious personal blog never occurred to me, especially once I started coding for a living. Whenever I thought about it, imposter syndrome took the wheel, and I felt my content wasn't interesting enough compared to what pros post every day and actively promote on Reddit, LinkedIn, or wherever.

At some point, I decided I finally wanted to share something too, and for that I wanted something mostly ready-made that could be set up without hassle. Static sites seemed like a good idea, so I first considered Hugo and later moved to Jekyll, the platform I shipped the first version of my site with. Both are great because many platforms make deployment a no-brainer: built-in GitHub integrations build a version of the website at every commit, which can then be deployed to production with a click.

When I went for Jekyll, I was also looking for a theme that wasn't necessarily minimalist or ultra-simple, and after some digging I stumbled upon Jasper2, a port of Ghost CMS's Casper theme. Discovering Ghost CMS threw a door open: it had many cool integrations, and I wanted a WYSIWYG editor - which is unlike me, as I love the command line and raw editors for almost everything. However, I didn't want to switch to a managed publishing platform, mostly because I didn't know how much effort I was going to put into the whole blog plan, or whether it was worth it.

So, the first setup of niccoloforlini.com was quite simple and consisted of:

- Google Domains as the registrar. I wanted to go with Cloudflare, but domain registration wasn't widely available there yet, and the same went for email routing, which was still an unannounced feature.

- Jekyll as the static site generator. Sure, I'm not a big fan of Ruby, having had to fiddle with it at work, but with a few additional plugins and some custom changes I squeezed in, I was ready to write in a fairly short amount of time. Granted, I spent a lot of time figuring out that a DNS misconfiguration was breaking the social card previews, but that eventually got sorted out.

- VS Code and GitHub were my writing companions: the former to help me write decent Markdown with real-time rendering, and the latter to store everything I had written up to that point without countless .txt files lying around.

- Vercel provided a fast and effortless integration that let me deploy the site in a matter of seconds with close to zero configuration. Again, I was surprised by how simple it was!

- Plausible to keep track of visitors. I went for it because I didn't want to deal with cookie banners and consent forms, or be intrusive with unnecessary trackers. I just wanted to know how many unique users visited my blog and which pages they read, nothing else.

This combination worked well and I was quite satisfied.

A few weeks later, the first article was ready: after proofreading it over and over, I published a few notes on how I integrated Slack and Bitrise at work with a custom bot to simplify the QA workflow for my teammates. It was far more successful than I expected, probably thanks to some reshares, and seeing how well it was received made me consider putting more effort into sharing the experiences and discoveries from both my professional career and my time off.

Time to re-do everything from scratch? 🤯

Even though I didn't have much time to dedicate to the blog (as you can tell from the lack of posts until the past few weeks), I kept an eye on Ghost and eventually found out that the platform itself can be self-hosted!

Over the past few months I had refined my DevOps-wannabe skills thanks to the internal set of microservices I maintain for our team (dozens of services ranging from bots to a Jenkins cluster, custom runners for GitHub Actions, and much more!), and that pushed me to start a side project of the side project: self-hosting my website and taking care of development, deployment, and maintenance/updates, with alerts and anything else that could help. To be fair, this was also heavily influenced by the time I spend lurking r/selfhosted and r/homelab on Reddit during my commute; they are fantastic resources if you want to learn how to host services on your own.

So I made a shopping list and, as always, looked for the options that gave me the best value for money.

Step 1 - Switching domain registrar 🔃

I finally moved my domain to Cloudflare: besides cheaper renewal prices, the free plan provides a lot of extras such as DDoS protection, a wildcard SSL certificate, and caching (even offline). The migration didn't require many changes, as I just moved the existing DNS records from Google Domains to Cloudflare.

Step 2 - A dedicated instance to play with 🖥️

I deployed a 4 OCPU / 24 GB RAM instance on Oracle Cloud thanks to their generous Always Free tier. The only downside is that it's an ARM instance, so some things didn't work right off the bat, but I was fine with some tweaking to get everything running. The free tier also includes two additional micro instances with far less computing power, which is where I migrated my Plausible instance.

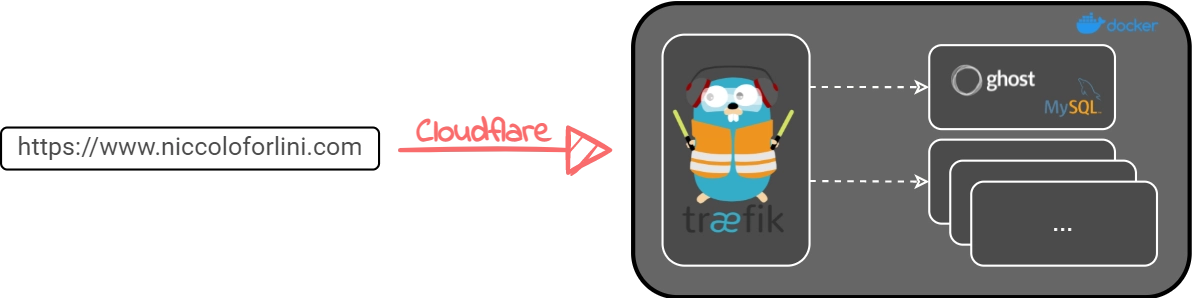

The main ARM instance currently has some Docker containers running, including:

- Ghost CMS + a dedicated MySQL instance, which were the main reason for having the Docker daemon running in the first place.

- Traefik for reverse proxying and SSL management. I've always worked with nginx and certbot to get SSL certificates, and (maybe I was taking the long road there) setting everything up from scratch just for HTTPS and reverse proxying always felt extremely tedious to me. Although Traefik and nginx differ quite a bit, especially feature-wise, with Traefik I just had to fiddle a bit with TOML files and container labels to make it work.

- Watchtower to keep the images updated, with particular attention to semantic versioning and a nightly schedule, just to make sure nothing breaks in the middle of the day, along with a Shoutrrr integration that sends a Telegram message to a custom channel I set up, so I know what's going on whenever something happens.
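A minimal docker-compose sketch of how these four containers could be wired together follows. It's an illustration under several assumptions, not a copy of a real setup: the service names, the `/srv/ghost` and `/srv/traefik` paths, the `letsencrypt` resolver name, the image tags, and the `${...}` variables (meant to come from a `.env` file) are all placeholders. Traefik's static configuration is passed as command-line flags instead of a TOML file to keep everything in one place, certificates are requested through a Let's Encrypt DNS challenge against Cloudflare, and pinning `ghost:5-alpine` / `mysql:8.0` is one way to let Watchtower pull only minor and patch updates.

```yaml
# Hypothetical docker-compose.yml: names, paths, tags, and variables are placeholders.
services:
  traefik:
    image: traefik:v2.10
    restart: unless-stopped
    command:
      - --providers.docker=true
      - --providers.docker.exposedbydefault=false # only route containers that opt in via labels
      - --entrypoints.web.address=:80
      - --entrypoints.web.http.redirections.entrypoint.to=websecure # redirect HTTP to HTTPS
      - --entrypoints.websecure.address=:443
      - --certificatesresolvers.letsencrypt.acme.email=${ACME_EMAIL}
      - --certificatesresolvers.letsencrypt.acme.storage=/letsencrypt/acme.json
      - --certificatesresolvers.letsencrypt.acme.dnschallenge=true
      - --certificatesresolvers.letsencrypt.acme.dnschallenge.provider=cloudflare
    environment:
      CF_DNS_API_TOKEN: ${CF_DNS_API_TOKEN} # Cloudflare API token used for the DNS challenge
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock:ro
      - /srv/traefik/letsencrypt:/letsencrypt

  ghost:
    image: ghost:5-alpine # pinned to the 5.x major version
    restart: unless-stopped
    depends_on:
      - db
    environment:
      url: https://niccoloforlini.com
      database__client: mysql
      database__connection__host: db
      database__connection__user: ghost
      database__connection__password: ${GHOST_DB_PASSWORD}
      database__connection__database: ghost
    volumes:
      - /srv/ghost/content:/var/lib/ghost/content
    labels:
      - traefik.enable=true
      - traefik.http.routers.ghost.rule=Host(`niccoloforlini.com`)
      - traefik.http.routers.ghost.entrypoints=websecure
      - traefik.http.routers.ghost.tls.certresolver=letsencrypt
      - traefik.http.services.ghost.loadbalancer.server.port=2368 # Ghost's default port
      - com.centurylinklabs.watchtower.enable=true

  db:
    image: mysql:8.0 # pinned to the 8.0 release line
    container_name: ghost-db
    restart: unless-stopped
    environment:
      MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
      MYSQL_DATABASE: ghost
      MYSQL_USER: ghost
      MYSQL_PASSWORD: ${GHOST_DB_PASSWORD}
    volumes:
      - /srv/ghost/mysql:/var/lib/mysql
    labels:
      - com.centurylinklabs.watchtower.enable=true

  watchtower:
    image: containrrr/watchtower
    restart: unless-stopped
    environment:
      WATCHTOWER_LABEL_ENABLE: "true" # only update containers carrying the enable label
      WATCHTOWER_SCHEDULE: "0 0 4 * * *" # 6-field cron (seconds first): every day at 4 AM UTC
      WATCHTOWER_CLEANUP: "true" # remove superseded images after updating
      WATCHTOWER_NOTIFICATIONS: shoutrrr
      WATCHTOWER_NOTIFICATION_URL: ${TELEGRAM_SHOUTRRR_URL} # e.g. telegram://token@telegram?chats=@channel
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
```

With the labels approach, nothing is exposed or auto-updated unless it explicitly opts in, which keeps Traefik itself out of Watchtower's nightly run.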

Of course, this wasn't enough. Sure, I had everything self-hosted, but I had close to no backup strategy, so any issue with my VM would have been a disaster.

Step 3 - Automatic backups and disaster recovery 💥

While Oracle offers a fairly solid backup plan even on the free tier (basically for the whole disk volume, which is ~150 GB), over the years I've learned the hard way that a single point of failure eventually becomes a recipe for disaster. I was - and still am - lazy, though, and exporting backups somewhere manually wasn't something I was up for. This sounded like a great moment to set up a CI worker to do it for me!

Another area I've been playing around with lately is GitHub Actions. Having worked with and set up many CI/CD systems over the past few years, both managed and built from scratch, I can say I'm really happy with its current state. It certainly lacked a lot of features when it first came out, but with additions like reusable workflows it has slowly become pretty solid, and I know several organizations using it as their daily driver.

The runner's job would be to:

- Connect via SSH to the Oracle instance

- Perform a backup of both the Docker container and the DB, and compress it

- Upload it to a versioned S3 bucket

- Report the outcome of the operation with a message on Telegram
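The first three operations could map to workflow steps roughly like the hypothetical sketch below (the Telegram notification is handled by a separate workflow). Everything specific here is an assumption: the secret names, the `ghost-db` container name, the `ghost` database, the `/srv/ghost/content` path on the VM, the `my-ghost-backups` bucket, Scaleway's `fr-par` endpoint, and Alpine's `aws-cli` package. `SSH_KNOWN_HOSTS` is assumed to hold the output of `ssh-keyscan` for the VM. It sticks to plain `ssh`/`scp` instead of third-party actions; since OpenSSH resolves `~/.ssh` from the user's passwd entry rather than `$HOME` (which GitHub sets to `/github/home` inside container jobs), the key and known_hosts files are passed explicitly, and they live in the container's `/tmp` so nothing lingers on the self-hosted runner's workspace.

```yaml
# Hypothetical backup job: secret names, container/database names, paths, and bucket are placeholders.
jobs:
  backup:
    runs-on: self-hosted-nforlini
    container: alpine
    env:
      TARGET: ${{ secrets.SSH_USER }}@${{ secrets.SSH_HOST }}
      SSH_OPTS: "-i /tmp/ssh_key -o UserKnownHostsFile=/tmp/known_hosts"
      AWS_ACCESS_KEY_ID: ${{ secrets.S3_ACCESS_KEY }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.S3_SECRET_KEY }}
      S3_ENDPOINT: https://s3.fr-par.scw.cloud
    steps:
      - name: Install SSH client and AWS CLI
        run: apk add --no-cache openssh-client aws-cli

      # 1. Connect via SSH: write the key and known hosts inside the throwaway container
      - name: Write SSH key and known hosts
        env:
          SSH_PRIVATE_KEY: ${{ secrets.SSH_PRIVATE_KEY }}
          SSH_KNOWN_HOSTS: ${{ secrets.SSH_KNOWN_HOSTS }}
        run: |
          printf '%s\n' "$SSH_PRIVATE_KEY" > /tmp/ssh_key
          chmod 600 /tmp/ssh_key
          printf '%s\n' "$SSH_KNOWN_HOSTS" > /tmp/known_hosts

      # 2. Dump the database and compress it together with Ghost's content directory
      #    (the quoted 'EOF' keeps $MYSQL_ROOT_PASSWORD unexpanded until it reaches the MySQL container)
      - name: Create the archive on the VM
        run: |
          ssh $SSH_OPTS "$TARGET" sh -s <<'EOF'
          set -e
          docker exec ghost-db sh -c 'exec mysqldump -uroot -p"$MYSQL_ROOT_PASSWORD" --single-transaction --databases ghost' > /tmp/ghost-db.sql
          tar -czf /tmp/ghost-backup.tar.gz -C /tmp ghost-db.sql -C /srv/ghost content
          rm /tmp/ghost-db.sql
          EOF

      # 3. Pull the archive back and upload it under a fixed key; bucket versioning keeps older copies
      - name: Upload to the versioned bucket
        run: |
          scp $SSH_OPTS "$TARGET:/tmp/ghost-backup.tar.gz" /tmp/ghost-backup.tar.gz
          aws s3 cp /tmp/ghost-backup.tar.gz s3://my-ghost-backups/ghost-backup.tar.gz --endpoint-url "$S3_ENDPOINT"
```

Uploading to the same key every night is a deliberate choice here: with versioning enabled, the bucket keeps the history, and the "latest backup" is always at a predictable path.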

The entire procedure takes at most two minutes, and the GitHub free plan provides a fair amount of free minutes (2,000) per month. Once again, though, that would have been too easy: I wanted full control over it, and I also wanted to learn how to deploy a self-hosted runner that could be used across all my repositories (which is great for CI checks on Rust code, as they can take a while).

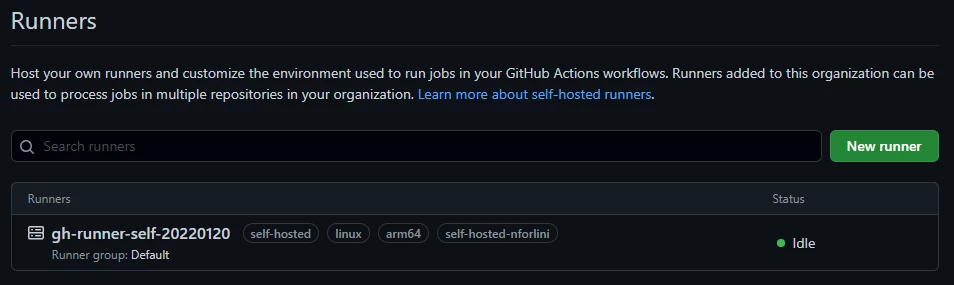

To do that, I created a private GitHub organization - with myself being the only member - where I moved the private repositories I wanted to use the self-hosted runner with. The process of deploying it was pretty easy as it takes probably a couple of minutes in total.

Fast forward, there is it, in the dashboard, waiting for jobs to be dispatched:

The YAML file defining the workflow it runs isn't that elaborate, but a few points were critical, especially at the beginning when I was trying to figure out how to make it work (SSH and SCP were not a walk in the park inside the runner without third-party actions).

```yaml
name: Backup Ghost and MySQL

on:
  workflow_dispatch: # Allows triggers on demand, even via HTTP calls
  schedule:
    - cron: "0 2 * * *" # Run daily at 2 AM UTC

jobs:
  backup:
    runs-on: self-hosted-nforlini # The name of my self-hosted runner
    container: alpine # Let's run the job inside a container
    steps: # All the ssh/scp/tar/aws-cli commands needed
```

The s3-backup workflow just performs the backup at 2 AM UTC and uploads it to an S3 bucket (in my case on Scaleway), but to make sure it actually ran, I added another workflow that gets triggered as soon as the backup completes, regardless of the outcome.

name: Notify status via Telegram

on:

workflow_run: # This ensures that this workflow runs after the below has completed

workflows: ["Backup Ghost and MySQL"]

types: [completed]

jobs:

notification:

runs-on: self-hosted-nforlini # Same as before

container: alpine # Containerized

steps: # Just one step that sends a Telegram message with title + status

- name: Shoutrrr

uses: containrrr/shoutrrr-action@v1

with:

url: ${{ secrets.SHOUTRRR_URL }}

title: GH Actions - niccoloforlini.com backup | ${{ github.event.workflow_run.conclusion }}

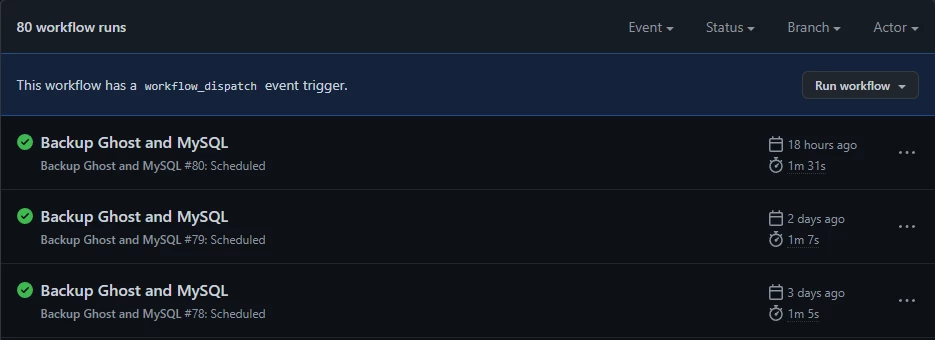

message: More info at ${{ github.server_url }}/${{ github.repository }}/actions/runs/${{ github.event.workflow_run.id }}Et voilà, every day at ~3 AM local time (with a bit of delay because of this) the workflow runs:

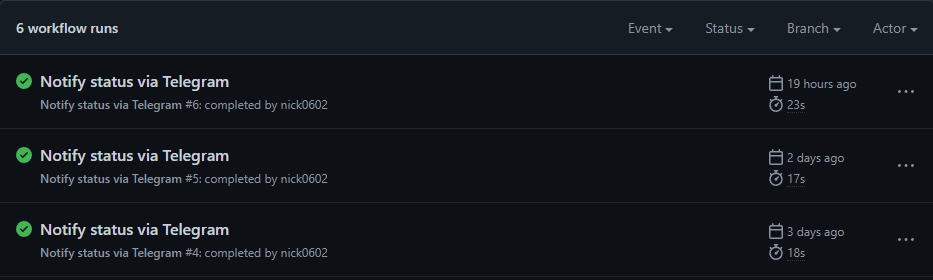

And the second workflow is triggered as well to notify the outcome:

This way, every night I get a notification in a private Telegram channel telling me whether the backup succeeded or failed, so if something goes wrong I'm a few clicks away from triggering another workflow that destroys the existing Ghost CMS instance and recreates it from the last working backup. The backup also runs at least two hours before Watchtower checks for and applies Docker image updates, so I always have the most recent data at hand in case a migration fails.
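A restore workflow along those lines could look like the hypothetical sketch below. It assumes a backup archive containing `ghost-db.sql` plus Ghost's `content` directory, a docker-compose project in `/srv/ghost` with a service named `ghost` and a MySQL container named `ghost-db`, a single object in a versioned bucket called `my-ghost-backups` on Scaleway's `fr-par` endpoint, and an SSH user allowed to run Docker and write to `/srv/ghost`; all of these names are placeholders. Rather than rebuilding every container, it stops Ghost, reloads the dump, swaps the content directory, and starts Ghost again.

```yaml
# Hypothetical restore workflow: every name, path, and secret below is a placeholder.
name: Restore Ghost from backup

on:
  workflow_dispatch: # Manual trigger only

jobs:
  restore:
    runs-on: self-hosted-nforlini
    container: alpine
    env:
      TARGET: ${{ secrets.SSH_USER }}@${{ secrets.SSH_HOST }}
      SSH_OPTS: "-i /tmp/ssh_key -o UserKnownHostsFile=/tmp/known_hosts"
      AWS_ACCESS_KEY_ID: ${{ secrets.S3_ACCESS_KEY }}
      AWS_SECRET_ACCESS_KEY: ${{ secrets.S3_SECRET_KEY }}
      S3_ENDPOINT: https://s3.fr-par.scw.cloud
    steps:
      - name: Install SSH client and AWS CLI
        run: apk add --no-cache openssh-client aws-cli

      - name: Write SSH key and known hosts
        env:
          SSH_PRIVATE_KEY: ${{ secrets.SSH_PRIVATE_KEY }}
          SSH_KNOWN_HOSTS: ${{ secrets.SSH_KNOWN_HOSTS }}
        run: |
          printf '%s\n' "$SSH_PRIVATE_KEY" > /tmp/ssh_key
          chmod 600 /tmp/ssh_key
          printf '%s\n' "$SSH_KNOWN_HOSTS" > /tmp/known_hosts

      # Without a version ID, this fetches the current (most recent) version of the object
      - name: Fetch the latest backup
        run: aws s3 cp s3://my-ghost-backups/ghost-backup.tar.gz /tmp/ghost-backup.tar.gz --endpoint-url "$S3_ENDPOINT"

      - name: Restore database and content on the VM
        run: |
          scp $SSH_OPTS /tmp/ghost-backup.tar.gz "$TARGET:/tmp/ghost-backup.tar.gz"
          ssh $SSH_OPTS "$TARGET" sh -s <<'EOF'
          set -e
          cd /srv/ghost
          docker compose stop ghost
          rm -rf /tmp/restore && mkdir /tmp/restore
          tar -xzf /tmp/ghost-backup.tar.gz -C /tmp/restore
          # The dump was taken with --databases, so it recreates and selects the database itself
          docker exec -i ghost-db sh -c 'exec mysql -uroot -p"$MYSQL_ROOT_PASSWORD"' < /tmp/restore/ghost-db.sql
          rm -rf content && mv /tmp/restore/content content
          docker compose start ghost
          EOF
```

If the latest backup turns out to be bad as well, an older version of the same object can be retrieved with `aws s3api get-object` and its `--version-id` option before running the restore step.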

Bonus point: remember the workflow_dispatch trigger from the first YAML file? Since it lets me run backups on demand, both from the repository page and via HTTP requests, I'm planning to extend the bot I currently use for notifications so that, thanks to Teloxide, it can trigger the workflow directly from the Telegram chat when needed. It's not like I really need it, but a Telegram bot written in Rust has always intrigued me, and this seems like a good reason to start.

That's it for now! Over the next few months, I'm planning to use this new VM to keep working on the blog (first on the list are replicas and zero-downtime deployments when the Docker images get updated) and also to host some of the projects I've been developing on and off over the past year. Hopefully, something interesting to share will come out of it. 🚀